In what will come as a shock only to those who haven't read anything about artificial intelligence in the last five years, a new group made up of AI industry people, researchers, and one celebrity has declared that AI could lead to global annihilation.

The Center for AI Safety released a brief statement signed by AI scientists, lots of industry leaders and, for some reason, the singer Grimes. It reads: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

The request for the world to take the threat of AI more seriously is one we've been hearing a lot lately, including during recent Congressional hearings where Sam Altman, the head of OpenAI (maker of ChatGPT), asked government leaders to step in and help rein in this technology. (Altman, by the way, is on the list of those who signed the statement.)

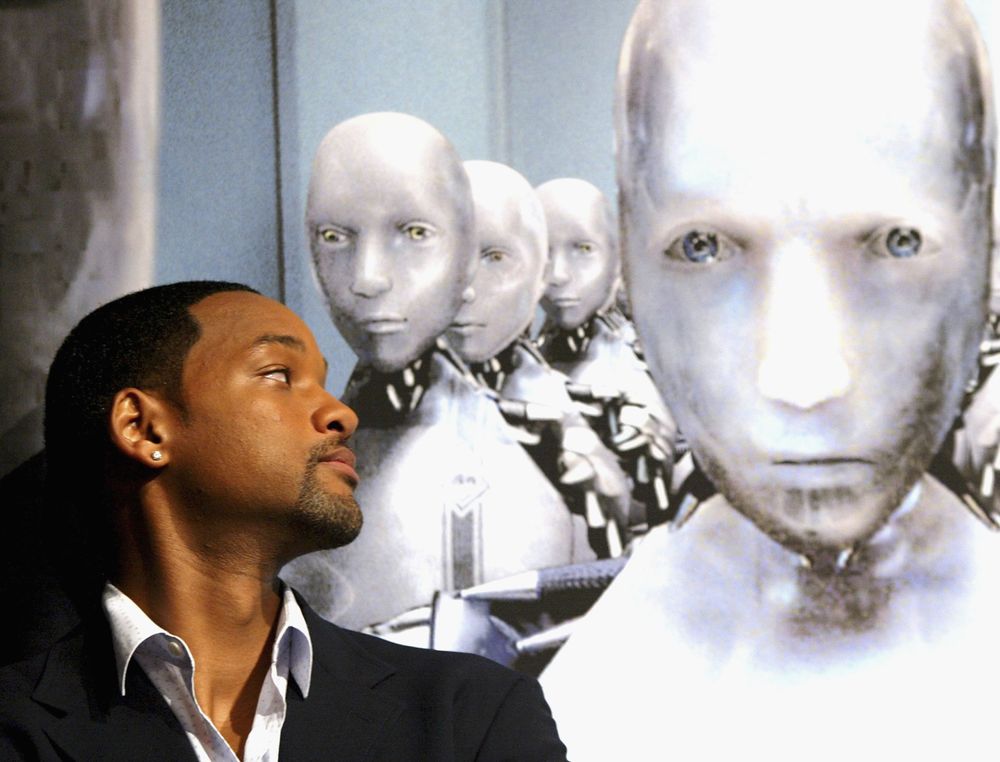

Can the government do anything at this point, though? Isn't the genie out of the bottle? (Speaking of genies, or at least a guy who played one, didn't Will Smith warn us that we might be going down this road nearly two decades ago with I, Robot?)

Didn't Terminator 2 provide enough warnings about Skynet to maybe nudge scientists to avoid, oh, I don't know, creating Skynet for real?!

It may seem like AI is this brand-new thing that suddenly feels dangerous, but we've had decades of warnings not to do exactly the thing that might lead to such an extinction event, word to Busta. We just ignored them. Good luck to the Center for AI Safety in turning around what may be an inevitable path. They're gonna need it, and so will we all.